By now, almost everyone has heard of Node.js, which is commonly used to handle large volumes of requests. It uses a single-threaded, event-driven model that performs I/O operations asynchronously instead of blocking on them, keeping the main thread free to handle more incoming requests. Microsoft’s IIS web server, however, has a reputation for being slow and bulky.
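As a rough illustration of what ‘non-blocking’ buys you, here is a minimal sketch (not part of our benchmark) in which a single Node.js thread starts 1,000 simulated I/O operations at once. `fakeQuery` is a hypothetical stand-in for a database call, built on `setTimeout`: each call registers a timer and returns immediately, so all of the waits overlap and the batch finishes in roughly the time of a single call.

```javascript
// Hypothetical stand-in for a database call: completes after `ms` milliseconds
// without occupying the thread while it waits.
function fakeQuery(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Starts `count` simulated queries at once on the single JavaScript thread
// and reports how long the whole batch took, in milliseconds.
async function runConcurrently(count, ms) {
  const start = Date.now();
  const pending = [];
  for (let i = 0; i < count; i++) {
    // Returns immediately; the waiting happens in the event loop.
    pending.push(fakeQuery(ms));
  }
  await Promise.all(pending);
  return Date.now() - start;
}

runConcurrently(1000, 50).then((elapsed) => {
  // Expect roughly 50 ms, not 1000 × 50 ms = 50 s.
  console.log(`1000 queries finished in ${elapsed} ms`);
});
```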

There’s a good reason for this: by default, ASP.NET handles requests synchronously, so each request occupies a thread until it completes. If more requests come in than there are threads in the thread pool, they are queued. But that’s not the only way to write .NET web code! ASP.NET has supported asynchronous request processing for a long time now, yet relatively few developers use it in their code.
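The queuing behavior of the blocking model can be reasoned about with a small simulation. The sketch below is a simplified, hypothetical model, not IIS’s actual scheduler: each of a fixed number of threads holds a request for its full duration, requests that find every thread busy wait in a FIFO queue, and, because that queue is bounded in practice (the IIS section later in this post raises its limit), requests arriving when the queue is already full are rejected with a 503. `simulateBlockingPool` and its parameters are illustrative names.

```javascript
// Simplified model of blocking, thread-per-request handling.
// arrivals: request arrival times in ms, sorted ascending.
// Returns one result per request: { status: 200, start, finish } or { status: 503 }.
function simulateBlockingPool(arrivals, threads, serviceMs, queueLimit) {
  const freeAt = new Array(threads).fill(0); // when each thread next becomes idle
  const starts = []; // start times of every admitted request

  return arrivals.map((t) => {
    const idle = freeAt.some((f) => f <= t);
    // Admitted requests that are still waiting for a thread at time t.
    const waiting = starts.filter((s) => s > t).length;
    if (!idle && waiting >= queueLimit) {
      return { status: 503 }; // queue full: reject immediately
    }
    // FIFO: the request takes whichever thread frees up first.
    let k = 0;
    for (let j = 1; j < threads; j++) {
      if (freeAt[j] < freeAt[k]) k = j;
    }
    const start = Math.max(t, freeAt[k]);
    freeAt[k] = start + serviceMs; // the thread is held for the whole request
    starts.push(start);
    return { status: 200, start, finish: start + serviceMs };
  });
}

// Four simultaneous requests, 2 threads, 100 ms each, room for 1 queued request.
console.log(simulateBlockingPool([0, 0, 0, 0], 2, 100, 1));
// Two requests finish at 100 ms, one waits and finishes at 200 ms, one gets a 503.
```

In this model, switching to async handlers shrinks the effective `serviceMs`: the thread is held only for the CPU work, not while the database is busy, so the same number of threads can serve many more concurrent requests.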

Since we use IIS here at Tipalti, we were curious: how does Node.js compare to IIS + .NET when the .NET code follows async best practices?

There are, of course, already several good posts floating around comparing Node.js and .NET. Here are some:

- http://mikaelkoskinen.net/post/asp-net-web-api-vs-node-js-benchmark-take-2

- http://www.salmanq.com/blog/net-and-node-js-performance-comparison/2013/03/

- https://gist.github.com/ilyaigpetrov/f6df3e6f825ae1b5c7e2

So why are we writing another one? Several reasons.

First, most of the existing posts are already several years old. Both frameworks have moved on since then, and we think that a new comparison is due.

Second, the existing comparisons just aren’t ‘real world’ enough. In a real application, your code wouldn’t simply read files from the local file system or make web requests to a remote site (both operations with highly variable latency that is hard to control or predict). Your web server code would more likely do some basic processing and run queries against a database hosted on a separate machine on the same network.

Also, your ASP.NET site would probably be hosted on IIS rather than run as a console application, with all the good and bad that entails.

So we decided to create a new benchmark that better emulates a ‘real’ application. We set up a database machine and two web APIs: one on Node.js and one built with WebAPI 2 and hosted on IIS. For each request, both APIs insert a new item into the database. In both cases, we relied on the framework’s async features as much as possible. The stored ‘items’ are fake objects, each containing only a single GUID.

Let’s dig in!

Hardware

The web servers are Amazon EC2 c3.xlarge instances with 4 logical processors and 8 GB of RAM. The Windows server runs Windows Server 2012 with IIS 8, and the Node.js server runs Ubuntu Server 14.04.

The database runs on an m3.xlarge instance with 15 GB of RAM and 4 CPUs, also running Ubuntu Server.

For the database, we chose MongoDB. It’s fast, simple to use, widespread, and well supported, and it has official client libraries for both JavaScript and .NET, maintained by the database developers themselves. It is installed on a single machine with all default settings.

.NET

The code is very simple. We have a single WebAPI controller with a single method. The method is marked async and returns a Task, so when it awaits an operation that hasn’t completed yet, the request thread is returned to the pool for other work until the awaited Task completes, similar to how Node.js works. We also use the collection’s InsertOneAsync method from the MongoDB driver, so the thread isn’t blocked while the insert is sent to the database and acknowledged.

Here is the .NET code:

```csharp
using System;
using System.Configuration;
using System.Threading.Tasks;
using System.Web.Http;
using MongoDB.Bson;
using MongoDB.Driver;

namespace AsyncTest.Controllers
{
    public class TaskController : ApiController
    {
        // Singleton client: MongoClient is thread-safe and manages its own
        // connection pool, so one instance is shared by all requests.
        private static readonly IMongoClient client =
            new MongoClient(ConfigurationManager.AppSettings["mongoConnection"]);

        [Route("Task")]
        public async Task<string> Get()
        {
            // Each request stores one new item containing a random GUID.
            string item = Guid.NewGuid().ToString("N");

            // The request thread is released while the insert is in flight.
            await AddItem(item);

            return "ok";
        }

        private async Task AddItem(string item)
        {
            var database = client.GetDatabase("items_db");
            var collection = database.GetCollection<BsonDocument>("items");
            var document = new BsonDocument { { "item_data", item } };

            await collection.InsertOneAsync(document);
        }
    }
}
```

IIS

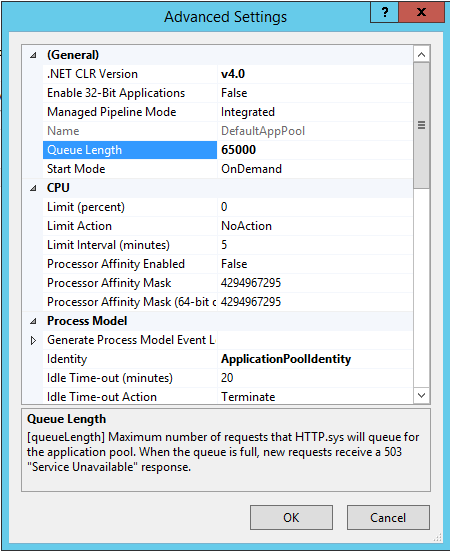

On the IIS side, we increased the application pool’s request queue length (the Queue Length setting) from its default of 1000 to 65000. Other than that, we kept all default settings.

Node.js

We used Node.js v4.4.5, the latest LTS release at the time of this post. To connect to MongoDB, we used the mongodb npm package, with connection code taken almost verbatim from the official MongoDB Node.js driver example:

http://mongodb.github.io/node-mongodb-native/2.1/reference/ecmascript6/crud/#inserting-documents

We also use Node.js’s built-in cluster module to fork one worker process per CPU core, making better use of the machine’s multiple cores.

Here is the Node.js code:

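```javascript
'use strict';

const http = require('http');
const cluster = require('cluster');
const numCPUs = require('os').cpus().length;
const MongoClient = require('mongodb').MongoClient;
const uuid = require('node-uuid');
const co = require('co');

const port = 80;
const url = 'mongodb://testmongodb/items_db';

if (cluster.isMaster) {
  // Fork one worker per CPU core; the workers share the listening port.
  for (var i = 0; i < numCPUs; i++) {
    cluster.fork();
  }

  cluster.on('exit', (worker, code, signal) => {
    console.log(`worker ${worker.process.pid} died`);
  });
} else {
  // Each worker opens its own connection to MongoDB before it starts listening.
  MongoClient.connect(url, function (err, db) {
    if (err) {
      console.error('could not connect to MongoDB', err);
      process.exit(1);
    }

    http.createServer(function (request, response) {
      co(function* () {
        const guid = uuid.v4();

        // Insert a single document; yield suspends this handler
        // without blocking the worker's event loop.
        yield db.collection('items').insertOne({ item: guid });

        response.end('ok');
      }).catch(function (e) {
        // Without this, a failed insert would leave the request hanging.
        response.statusCode = 500;
        response.end('error');
      });
    }).listen(port);
  });
}
```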
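On newer Node.js versions (7.6 and later), the `co` generator wrapper is no longer needed, because native `async`/`await` does the same job. The sketch below is not the code we benchmarked; it is a hypothetical rewrite of just the request handler. `makeHandler` is an illustrative name, the handler takes the collection as a parameter (so it can be exercised with a stub), and it uses `crypto.randomUUID()` (Node.js 14.17+) in place of the `node-uuid` package.

```javascript
const crypto = require('crypto');

// Builds a request handler around any object exposing an async
// insertOne(doc) method, such as a MongoDB driver collection.
function makeHandler(collection) {
  return async function handler(request, response) {
    try {
      // Same document shape as the benchmarked code: one random GUID.
      await collection.insertOne({ item: crypto.randomUUID() });
      response.end('ok');
    } catch (err) {
      // Reply with an error instead of leaving the request hanging.
      response.statusCode = 500;
      response.end('error');
    }
  };
}

// Usage (assumption: `collection` comes from an already connected MongoClient):
// require('http').createServer(makeHandler(collection)).listen(80);

module.exports = { makeHandler };
```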

Client

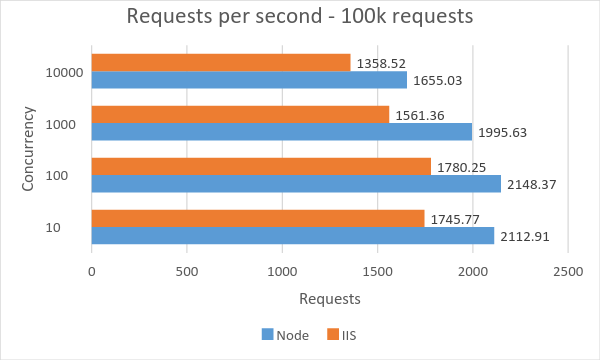

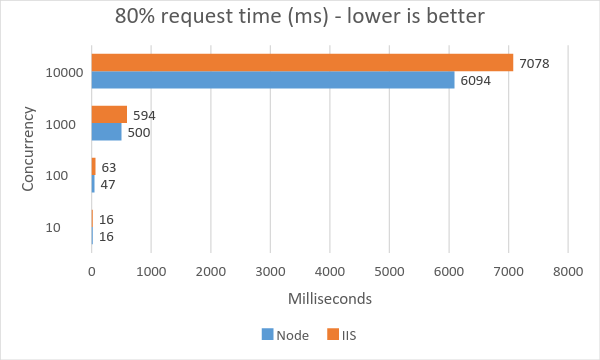

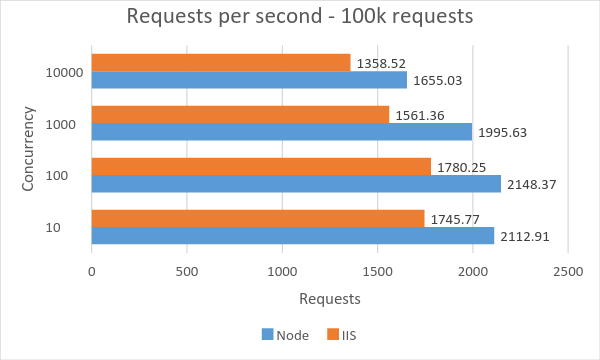

The tests were run from a separate client machine using ApacheBench (ab.exe). We sent 100,000 requests to each of the two endpoints at concurrency levels ranging from 10 to 10,000.
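ab’s two main knobs are the total number of requests (`-n`) and the number kept in flight at once (`-c`). The sketch below illustrates those semantics with a small concurrency limiter; it is a hypothetical stand-in, not how ab is implemented. `runLoad` is an illustrative name, and `task` would be a function that issues one HTTP request to the endpoint under test.

```javascript
// Runs `task` `total` times, keeping at most `concurrency` calls in flight,
// similar in spirit to `ab -n <total> -c <concurrency>`.
async function runLoad(total, concurrency, task) {
  let started = 0;
  let failures = 0;

  // Each "lane" keeps issuing requests until all of them have been started.
  // The check and increment run without an await in between, so no two
  // lanes can claim the same request.
  async function lane() {
    while (started < total) {
      started++;
      try {
        await task();
      } catch (err) {
        failures++;
      }
    }
  }

  const lanes = [];
  for (let i = 0; i < Math.min(concurrency, total); i++) {
    lanes.push(lane());
  }
  await Promise.all(lanes);
  return { completed: total - failures, failures };
}
```

For example, on Node.js 18 or later `task` could be `() => fetch('http://testserver/Task')`, where the host name is a placeholder (note that `fetch` rejects only on network errors, not on HTTP error statuses).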

What were the performance differences we found?

The results are consistent: Node.js was faster than IIS in every test, and by a steady margin of 17% to 20%.
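‘X% faster’ can be computed in two ways that give different numbers for the same measurements, so it helps to be explicit. The helper below uses made-up timings, not our measurements: if one server needs 100 ms per request and the other 120 ms, the first takes about 16.7% less time, while the second takes 20% more. `speedup` is an illustrative name.

```javascript
// Two common ways to express how much faster `fastMs` is than `slowMs`,
// given mean times per request in any consistent unit.
function speedup(fastMs, slowMs) {
  return {
    // Share of time saved, relative to the slower server.
    lessTime: (slowMs - fastMs) / slowMs,
    // Extra time the slower server needs, relative to the faster one.
    moreTime: (slowMs - fastMs) / fastMs,
  };
}

// Hypothetical timings, for illustration only.
const s = speedup(100, 120);
console.log((s.lessTime * 100).toFixed(1) + '% less time'); // 16.7% less time
console.log((s.moreTime * 100).toFixed(1) + '% more time'); // 20.0% more time
```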

Both frameworks proved resilient. There were no failed or dropped requests, and the time per request grew linearly with load, which shows that both scale well. Node.js is the clear winner here, but WebAPI on IIS performed very well too.
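Linear growth in time per request is what you would expect when throughput holds steady as concurrency rises. By Little’s law, the average number of requests in the system equals throughput × average time in the system, so with a fixed number of requests kept in flight, mean latency ≈ concurrency ÷ throughput. As far as we know, ab’s ‘Time per request (mean)’ figure is computed the same way (concurrency × total test time ÷ completed requests). The sketch below applies the relation to a made-up throughput, not our results; `expectedLatencyMs` is an illustrative name.

```javascript
// Little's law: inFlight = throughput × latency, so latency = inFlight / throughput.
// Returns the expected mean latency in milliseconds.
function expectedLatencyMs(concurrency, throughputPerSec) {
  return (concurrency * 1000) / throughputPerSec;
}

// Hypothetical server that saturates at 2,000 requests per second:
// latency grows in direct proportion to concurrency (5, 50, 500, 5000 ms).
for (const c of [10, 100, 1000, 10000]) {
  console.log(`concurrency ${c}: ~${expectedLatencyMs(c, 2000)} ms per request`);
}
```

If throughput instead collapsed under load, latency would grow faster than linearly, which is the failure mode neither server showed.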

Comments

Nice article!

One thing: you wrote “If more requests come in than there are threads in the thread pool, they will be queued.” This seems to contradict https://technet.microsoft.com/en-us/library/dd441171(v=office.13).aspx, which states that this will result in a 503. Could you explain further what you meant?

Thanks!

Thanks!

When a request comes in and no thread is available to process it, the request is added to a queue. That queue is not unlimited, though: once it is full as well, additional requests start returning a 503. As you can see in the IIS setup section of the article, we increased the queue size to 65k, because the default limit of 1000 is too low for our test.

Hi,

Nice article. However, IIS sits behind a proxy that supports multiple web apps, while Node is (I assume) hosted as a single app. To make the comparison fair, shouldn’t you be using “http-proxy-middleware”?

Thanks,

Susantha
